UN Tests AI Avatars to Simulate Refugees and Combatants

The United Nations is experimenting with AI-generated avatars to educate people about the crisis in Sudan, sparking ethical debates.

The United Nations University Centre for Policy Research (UNU-CPR) has developed two AI avatars, Amina and Abdalla, that simulate the experiences of a Sudanese refugee and a combatant leader, respectively. The project aims to educate people about the humanitarian crisis in Sudan, but it has drawn controversy over the ethics of using AI to represent real human suffering.

The AI Avatars

- Amina: A digital representation of a woman living in a refugee camp in Chad after fleeing violence in Sudan.

- Abdalla: A simulated combatant leader from the Rapid Support Forces (RSF), a group accused of ethnic cleansing in Darfur.

Ethical Concerns

The project has faced backlash from humanitarian organizations and nonprofits. Critics argue that AI avatars risk dehumanizing refugees and sanitizing their experiences. One workshop participant asked, "Why would we want to present refugees as AI creations when there are millions of refugees who can tell their stories as real human beings?"

Project Goals

Eleonore Fournier-Tombs, a data scientist at UNU-CPR, emphasized that the UN needs to explore AI's ethical implications proactively. Eduardo Albrecht, the project's lead, described the avatars as a "straw man" meant to provoke discussion about AI's role in humanitarian work.

Technical Details

The avatars were created using HeyGen, which relies on OpenAI's GPT-4o mini combined with Retrieval-Augmented Generation (RAG) to drive the avatars' responses. Amina scored 80% accuracy when answering questions based on real refugee surveys, but the paper acknowledges that the result is limited because the underlying model's training data is undisclosed.
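To make the two ideas in this section concrete, here is a minimal Python sketch of how retrieval-augmented prompting and an accuracy score work in general. It is not the project's code and does not call HeyGen or OpenAI. The function names, the placeholder passages, and the keyword-overlap retriever are all assumptions made for illustration; a production RAG system would typically use embedding-based search instead.

```python
from collections import Counter

# Hypothetical placeholder passages; NOT taken from the UNU-CPR knowledge base.
PASSAGES = [
    "Placeholder passage about camp location and shelter.",
    "Placeholder passage about food and water access.",
    "Placeholder passage about schooling for children.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def retrieve(question: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages sharing the most words with the question.

    Keyword overlap is a stand-in (assumption) for the vector search a
    real RAG pipeline would normally use.
    """
    q_counts = Counter(tokenize(question))

    def overlap(passage: str) -> int:
        return sum((q_counts & Counter(tokenize(passage))).values())

    return sorted(passages, key=overlap, reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the text that would be sent to a language model."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


def accuracy(predicted: list[str], expected: list[str]) -> float:
    """Fraction of answers that exactly match the reference answers."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


if __name__ == "__main__":
    question = "What is the food and water situation?"
    print(build_prompt(question, retrieve(question, PASSAGES)))
    # Hypothetical answers: 4 of 5 match the reference set.
    print(accuracy(["a", "b", "c", "d", "e"], ["a", "b", "c", "d", "x"]))
```

The design point is that the model only sees the retrieved passages plus the question, so the quality of the source material bounds the quality of the answers. An accuracy figure like the one reported for Amina is a ratio of matching answers to total questions, however the match is defined in the paper.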

Future Applications

The UNU-CPR paper speculated that AI avatars could one day assist in donor communications or negotiation training. However, participants warned against replacing real refugee voices with AI simulations.

Conclusion

While the project highlights AI's potential in humanitarian contexts, it also underscores the need for careful ethical consideration. As Albrecht put it, "You have to go on a date with someone to know you don’t like ’em."

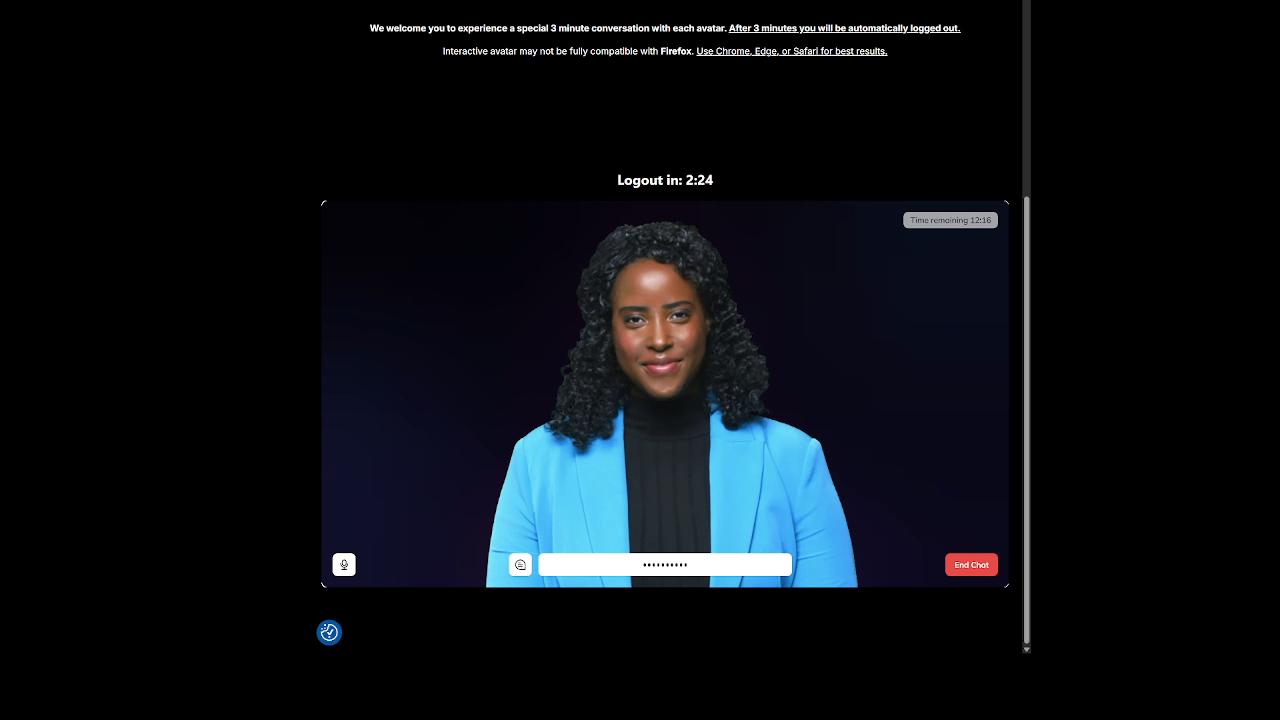

One of the conversations with 'Amina.'

One of the conversations with 'Abdalla.'

About the Author

Dr. Sarah Chen

AI Research Expert

A seasoned AI expert with 15 years of research experience, including 8 years at the Stanford AI Lab, specializing in machine learning and natural language processing. Chen currently serves as a technical advisor to multiple AI companies and regularly contributes AI technology analysis to outlets such as MIT Technology Review.