NVIDIA RTX AI PCs Boost AnythingLLM Performance With NIM Support

NVIDIA RTX GPUs and NIM microservices accelerate AnythingLLM, enabling faster local LLM workflows for AI enthusiasts.

Large language models (LLMs) are revolutionizing AI applications, from chatbots to code generators. One of the most accessible tools for working with LLMs is AnythingLLM, a privacy-focused desktop app designed for AI enthusiasts. With new support for NVIDIA NIM microservices on GeForce RTX and NVIDIA RTX PRO GPUs, AnythingLLM now delivers even faster performance for local AI workflows.

What Is AnythingLLM?

AnythingLLM is an all-in-one AI application that enables users to run local LLMs, retrieval-augmented generation (RAG) systems, and agentic tools. It bridges the gap between LLMs and user data, offering features like:

- Question answering: Get answers from leading LLMs such as Llama and DeepSeek R1 at no cost.

- Personal data queries: Privately query PDFs, Word files, and more using RAG.

- Document summarization: Generate summaries of lengthy research papers.

- Data analysis: Extract insights by querying files with LLMs.

- Agentic actions: Dynamically research content and run generative tools.
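Retrieval-augmented generation, which powers the personal data queries above, works by finding the passages in your files that best match a question and passing them to the LLM as context. The following is a minimal sketch of that retrieval step, not AnythingLLM's implementation. It assumes a simple bag-of-words cosine similarity in place of the learned embedding model a real RAG system would use, and the helper names (`tokenize`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercased word counts; a toy stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k document chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Prepend the retrieved chunks as context for the LLM to answer from."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

For example, given the made-up chunks `["The quarterly report shows revenue grew 12 percent.", "The office kitchen is closed on Fridays.", "Revenue growth was driven by new enterprise customers."]`, `retrieve("Why did revenue grow?", chunks)` keeps the two revenue chunks and drops the kitchen note; the resulting prompt is what gets sent to the local model.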

AnythingLLM supports a wide range of open-source LLMs and cloud-based models from OpenAI, Microsoft, and Anthropic. Its intuitive interface and one-click install make it ideal for AI enthusiasts.

RTX Acceleration and NIM Integration

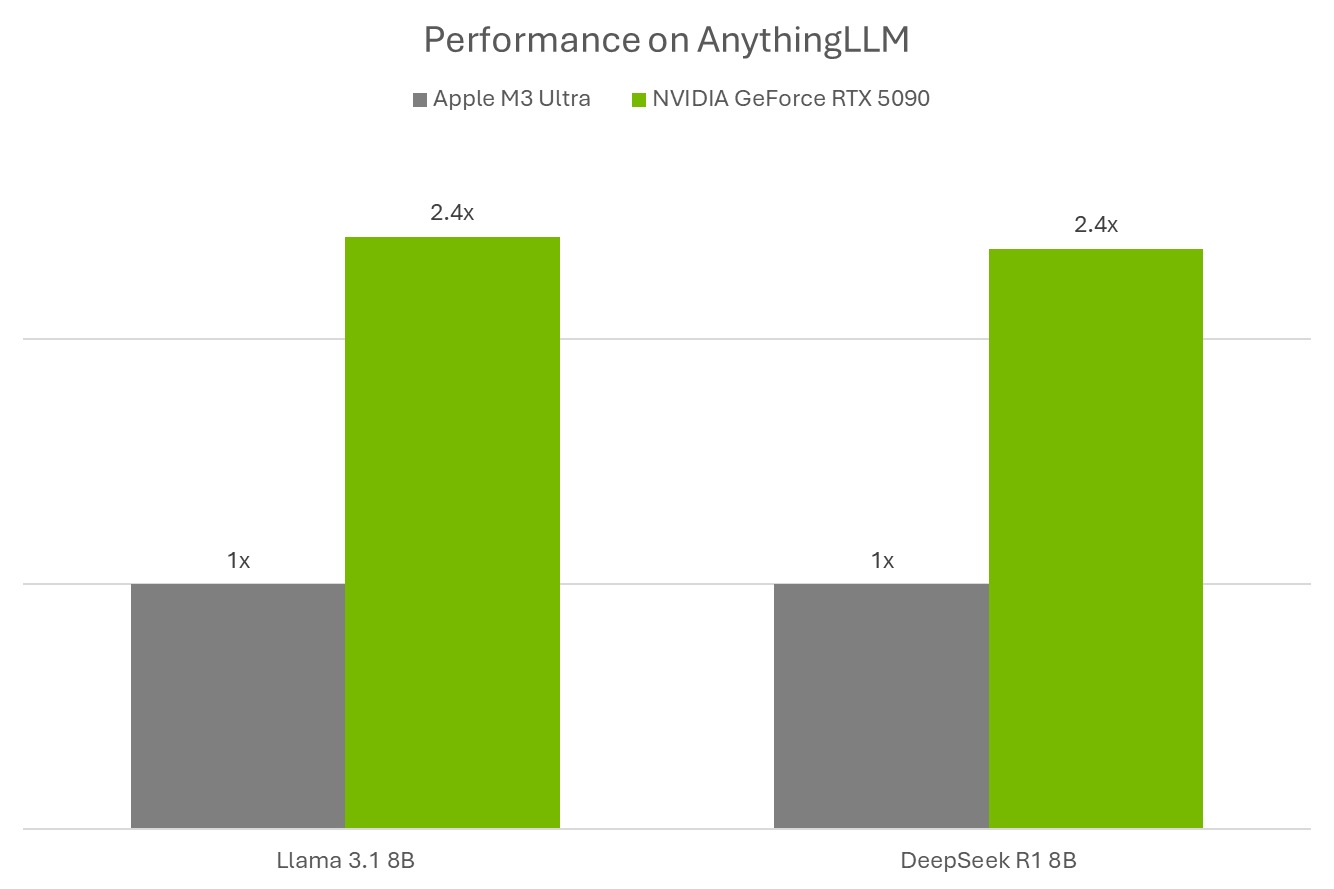

GeForce RTX and NVIDIA RTX PRO GPUs significantly boost AnythingLLM performance by using Tensor Cores to accelerate AI inference. AnythingLLM runs models through Ollama, which executes them on-device using llama.cpp and the GGML tensor library, both optimized for RTX GPUs. In performance tests, the GeForce RTX 5090 delivered 2.4x faster LLM inference in AnythingLLM than an Apple M3 Ultra on both Llama 3.1 8B and DeepSeek R1 8B.

NVIDIA NIM microservices simplify AI workflow setup by packaging generative AI models with standardized APIs. These microservices are now integrated into AnythingLLM, so users can try models and deploy them without manual setup. Developers can then build on them with NVIDIA AI Blueprints, reference workflows for additional generative AI use cases.
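AnythingLLM handles the connection to a NIM for you, but it can help to see what a "standardized API" means in practice: NIM for LLMs exposes an OpenAI-compatible chat completions endpoint, so any script can talk to it with a plain HTTP request. This is a minimal sketch using only the Python standard library. The URL (port 8000 on localhost) and the model id are assumptions; replace them with whatever your local NIM actually serves. `build_chat_request` and `ask_nim` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a NIM for LLMs is running locally and serving its
# OpenAI-compatible API on port 8000; adjust to match your setup.
NIM_URL = "http://localhost:8000/v1/chat/completions"
# Assumption: example model id; use the model your NIM actually serves.
MODEL = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt: str, model: str = MODEL, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload with one user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def ask_nim(prompt: str, url: str = NIM_URL) -> str:
    """POST the payload to the local endpoint and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    # OpenAI-compatible responses carry the text in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

With a NIM running, `ask_nim("Summarize this paragraph: ...")` returns the model's reply as a string. Because the request format follows the OpenAI convention, the same payload would also work against other OpenAI-compatible local servers by changing only `url` and `model`.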

Future Prospects

As NVIDIA expands its NIM microservices and AI Blueprints, tools like AnythingLLM will unlock more multimodal AI applications. The RTX AI Garage blog series continues to highlight community-driven innovations, offering insights into building AI agents and creative workflows on RTX-powered systems.

About the Author

David Chen

AI Startup Analyst

Senior analyst focusing on AI startup ecosystem with 11 years of venture capital and startup analysis experience. Former member of Sequoia Capital AI investment team, now independent analyst writing AI startup and investment analysis articles for Forbes, Harvard Business Review and other publications.