LM Studio Boosts LLM Performance Using NVIDIA GeForce RTX GPUs and CUDA 12.8

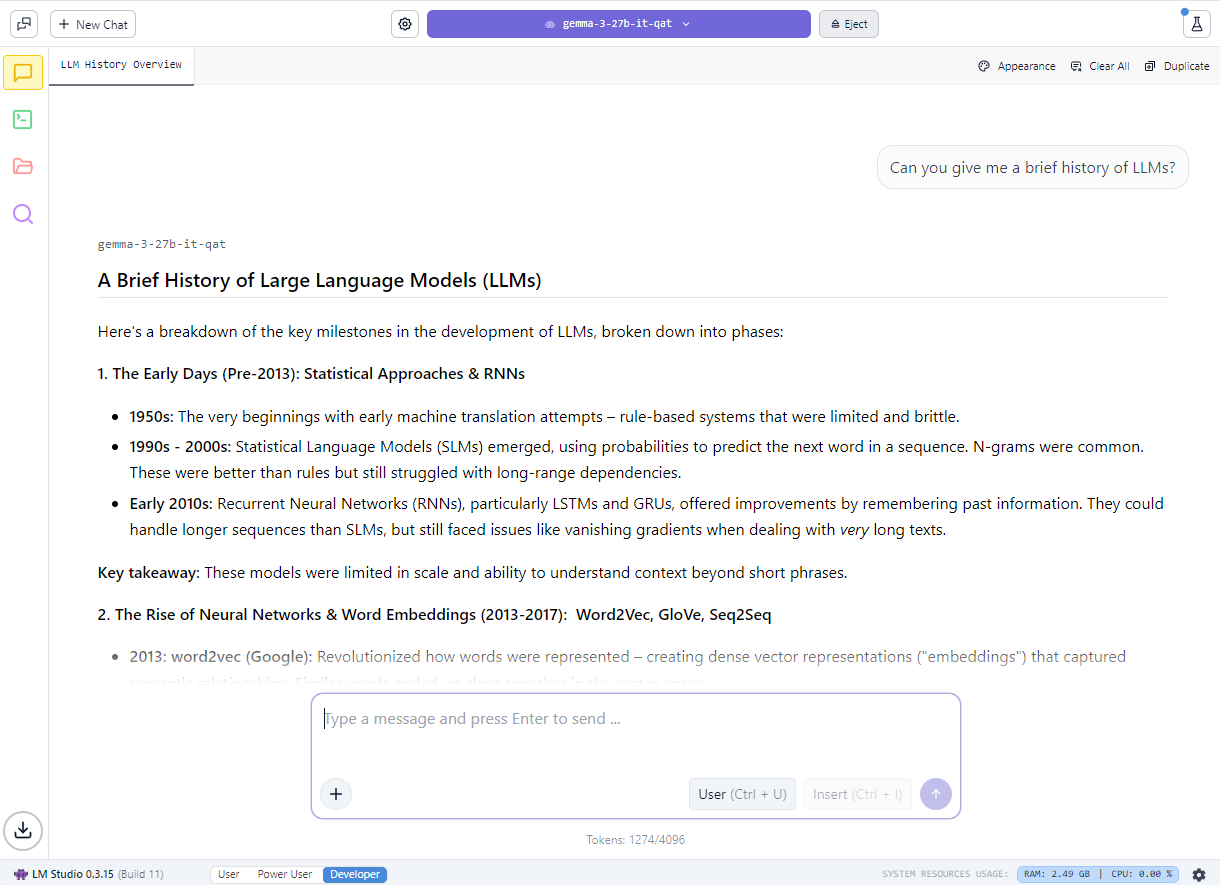

The latest version of the desktop application offers improved developer tools, model controls, and enhanced performance for RTX GPUs.

As AI applications grow—from document summarization to custom software agents—developers and enthusiasts are seeking faster, more flexible ways to run large language models (LLMs). Running models locally on PCs with NVIDIA GeForce RTX GPUs enables high-performance inference, better data privacy, and full control over AI deployment. LM Studio, a free tool, simplifies local LLM experimentation and integration.

Key Improvements in LM Studio 0.3.15

The latest release, LM Studio 0.3.15, delivers:

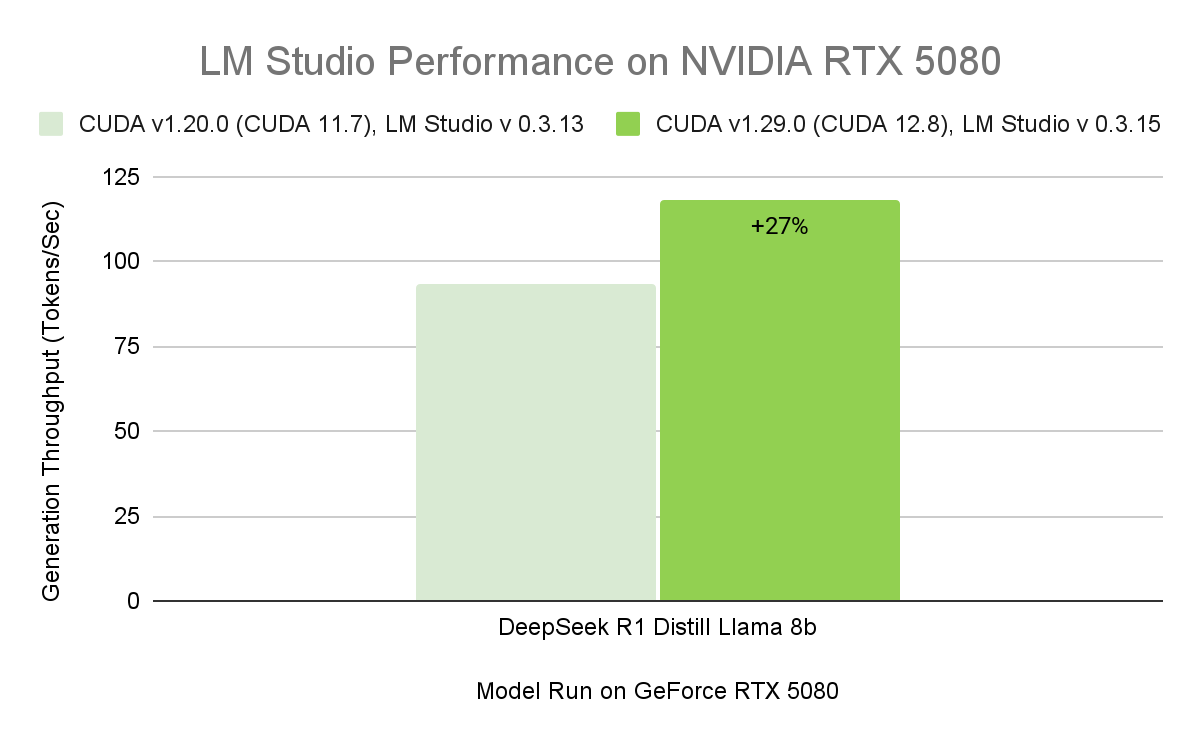

- Faster performance for RTX GPUs via CUDA 12.8, reducing model load and response times.

- New developer features, including granular control over tool use (`tool_choice`) and an upgraded system prompt editor.

- Optimized llama.cpp runtime, with NVIDIA-backed enhancements like CUDA graph enablement (up to 35% throughput boost) and flash attention CUDA kernels (15% faster processing).
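LM Studio's local server exposes an OpenAI-compatible chat completions API, so `tool_choice` is set per request alongside the tool definitions. The sketch below is a minimal Python example that assumes the server is running at its default address (`http://localhost:1234/v1`) with a tool-capable model loaded. The model ID and the `get_weather` tool are hypothetical placeholders. In the OpenAI convention, `"auto"` lets the model decide whether to call a tool, `"none"` disables tool calls, and `"required"` forces at least one call.

```python
import json
import urllib.request

# Assumption: LM Studio's local server listens on its default address.
# Adjust host and port to match your own setup.
BASE_URL = "http://localhost:1234/v1"


def build_tool_request(model, user_prompt, tool_choice="auto"):
    """Build an OpenAI-style chat completion payload with one tool attached."""
    # Hypothetical tool, used only to illustrate the request shape.
    tools = [{
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Return the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }]
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": tools,
        # "auto": model decides; "none": no tool calls; "required": must call a tool.
        "tool_choice": tool_choice,
    }


def send_chat_request(payload, base_url=BASE_URL):
    """POST the payload to /chat/completions and return the decoded JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    payload = build_tool_request("your-model-id", "What's the weather in Paris?", "required")
    print(json.dumps(payload, indent=2))
    # Uncomment once the LM Studio server is running:
    # print(send_chat_request(payload))
```

Nothing is sent until `send_chat_request` is called, so the payload can be inspected offline first. In OpenAI-compatible responses, a tool call shows up under `choices[0].message.tool_calls`.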

Flexibility for Developers and Users

LM Studio supports:

- Open models like Gemma, Llama 3, Mistral, and Orca.

- Multiple quantization formats, from 4-bit to full precision.

- Local API endpoints, enabling integration with apps like Obsidian via community plugins (Text Generator, Smart Connections).
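The quantization format largely determines whether a model fits in GPU memory. As a rough rule, weight memory is the parameter count times the bits per weight, divided by 8. The helper below applies only that formula. It ignores the KV cache, activations, and the per-block scale factors that make real 4-bit formats take slightly more than 4 bits per weight, so treat its output as a lower bound.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and quantization scale factors.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # An 8-billion-parameter model at several precisions.
    for bits in (4, 8, 16, 32):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(8, bits):.1f} GB")
```

For an 8-billion-parameter model this gives about 4 GB at 4-bit and 32 GB at 32-bit, an 8x spread across the range of formats listed above.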

Getting Started

- Download LM Studio for Windows, macOS, or Linux.

- Install the CUDA 12 llama.cpp runtime for optimal GPU performance.

- Enable Flash Attention and GPU offloading in settings for maximum throughput.
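GPU offloading in llama.cpp-based runtimes works layer by layer: layers placed on the GPU run there, and the rest run on the CPU. One rough way to pick an offload count is to divide the free VRAM by the per-layer weight size. The Python sketch below assumes equal-sized layers and a hypothetical fixed `reserve_gb` headroom for the KV cache and runtime buffers. It is a heuristic, not LM Studio's own allocation logic.

```python
import math


def layers_to_offload(model_size_gb: float, num_layers: int, vram_gb: float,
                      reserve_gb: float = 1.5) -> int:
    """Estimate how many layers fit on the GPU, assuming equal-sized layers.

    reserve_gb is a hypothetical headroom for the KV cache, CUDA context and
    scratch buffers; the right value depends on GPU, model and context length.
    """
    if num_layers <= 0 or model_size_gb <= 0:
        raise ValueError("model_size_gb and num_layers must be positive")
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    per_layer_gb = model_size_gb / num_layers
    # Never offload more layers than the model has.
    return min(num_layers, math.floor(usable_gb / per_layer_gb))


if __name__ == "__main__":
    print(layers_to_offload(4.0, 32, 8.0))                 # small model: full offload
    print(layers_to_offload(16.0, 32, 8.0, reserve_gb=2))  # larger model: partial offload
```

With a 4 GB, 32-layer model on an 8 GB card, every layer fits. A 16 GB, 32-layer model on the same card with 2 GB reserved gets 12 layers on the GPU and runs the remainder on the CPU.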


About the Author

Michael Rodriguez

AI Technology Journalist

Veteran technology journalist with 12 years of experience covering the AI industry. Former AI section editor at TechCrunch, now a freelance writer contributing in-depth AI industry analysis to outlets such as Wired and The Verge, with a particular eye for AI startups and emerging technology trends.